Author: Mia | Reading: 3

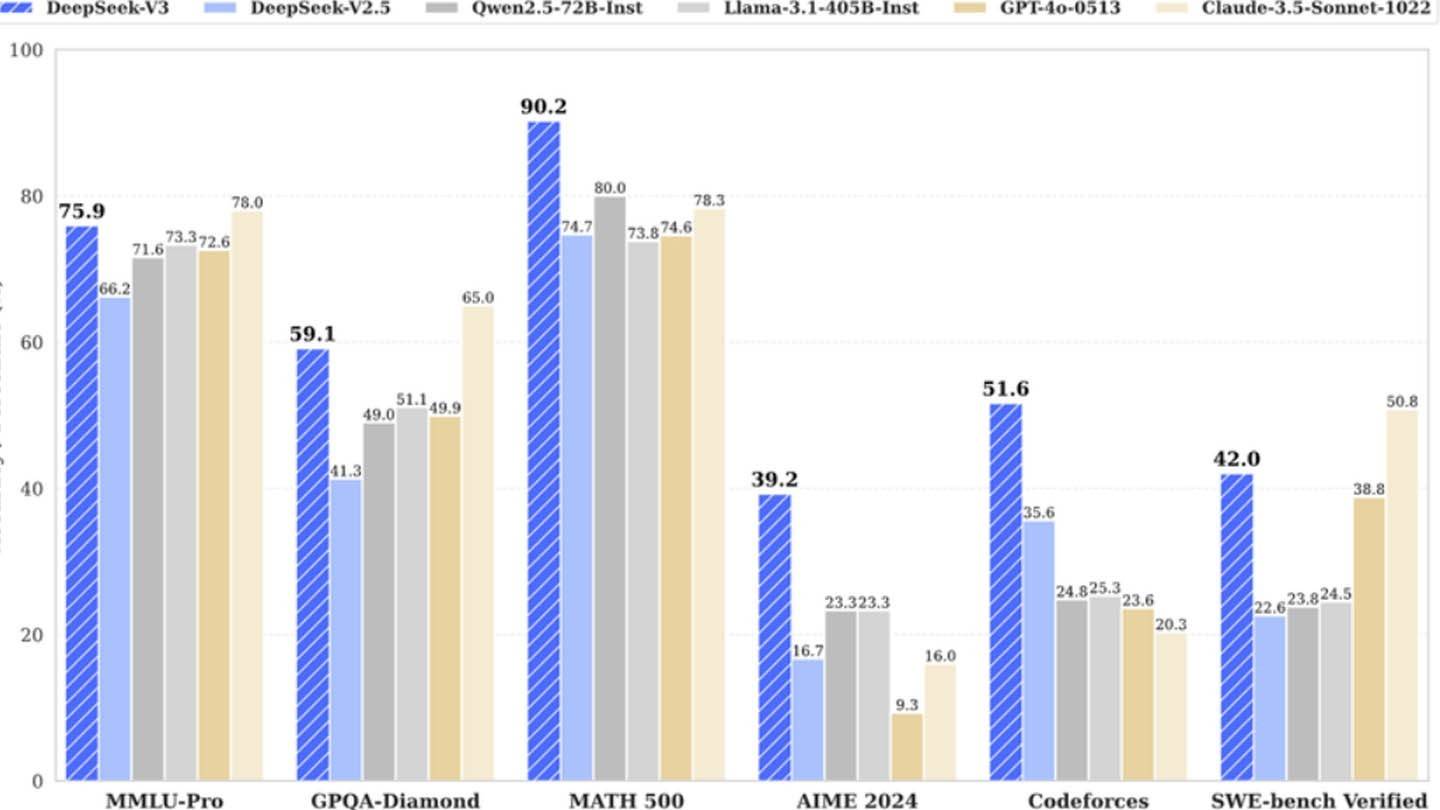

DeepSeek's surprisingly inexpensive AI model challenges industry norms. While DeepSeek boasts a mere $6 million training cost for its DeepSeek V3 model, a closer look reveals a far more substantial investment.

Image: ensigame.com

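DeepSeek V3 combines several techniques. Multi-token Prediction (MTP) trains the model to predict several upcoming tokens at each position rather than only the next one. A Mixture of Experts (MoE) design uses 256 routed expert networks in each MoE layer, only a small subset of which is activated for any given token. Multi-head Latent Attention (MLA) compresses the attention keys and values into a compact latent representation, reducing memory use during inference. Together, these techniques improve both accuracy and efficiency.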
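
The routing idea behind MoE can be sketched in a few lines. This is a minimal toy, assuming a generic softmax top-k gate and tiny made-up sizes (8-dimensional tokens, 16 experts, 2 active per token). `ToyMoELayer` and its parameters are hypothetical; the sketch does not reproduce DeepSeek V3's actual gating function, shared expert, or load balancing.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


class ToyMoELayer:
    """Hypothetical top-k Mixture of Experts layer with toy sizes."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one scoring vector per expert.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each toy expert is a single dim x dim linear map
        # (real experts are small feed-forward networks).
        self.experts = [
            [[rng.gauss(0, 1 / math.sqrt(dim)) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]

    def route(self, token):
        """Pick the top_k highest-scoring experts and their mixing weights."""
        scores = matvec(self.router, token)
        ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        chosen = ranked[: self.top_k]
        weights = softmax([scores[i] for i in chosen])
        return chosen, weights

    def forward(self, token):
        """Run only the chosen experts and mix their outputs."""
        chosen, weights = self.route(token)
        out = [0.0] * len(token)
        for expert_id, w in zip(chosen, weights):
            expert_out = matvec(self.experts[expert_id], token)
            out = [o + w * e for o, e in zip(out, expert_out)]
        return out, chosen


rng = random.Random(1)
token = [rng.gauss(0, 1) for _ in range(8)]
layer = ToyMoELayer(dim=8, num_experts=16, top_k=2)
output, experts_used = layer.forward(token)
print(experts_used, len(output))
```

Only the two selected experts do any work for this token; the other 14 are skipped, which is where an MoE model saves compute compared with running every parameter on every token.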

However, SemiAnalysis reported a significant discrepancy. By its estimate, DeepSeek's infrastructure comprises around 50,000 Nvidia Hopper GPUs, representing roughly $1.6 billion in hardware plus $944 million in operational costs. This contrasts sharply with the publicized $6 million training figure, which covers only the GPU time used for pre-training and excludes research, refinement, data processing, and infrastructure.
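
A back-of-the-envelope comparison makes the size of that gap concrete. This sketch simply adds the two SemiAnalysis figures quoted above and divides by the $6 million headline; treating hardware spending and operating costs as one additive total is a simplifying assumption, and `cost_gap` is a hypothetical helper, not anyone's published methodology.

```python
# Figures as quoted in the article, in USD.
PUBLICIZED_TRAINING_COST = 6_000_000
GPU_HARDWARE = 1_600_000_000
OPERATING_COSTS = 944_000_000


def cost_gap(hardware, opex, headline):
    """Return (rough total spend, multiple of the headline figure).

    Assumes hardware and operating costs can simply be summed,
    which is a simplification for illustration only.
    """
    total = hardware + opex
    return total, total / headline


total, ratio = cost_gap(GPU_HARDWARE, OPERATING_COSTS, PUBLICIZED_TRAINING_COST)
print(f"Rough total: ${total / 1e9:.2f}B, about {ratio:.0f}x the $6M headline")
# prints "Rough total: $2.54B, about 424x the $6M headline"
```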

DeepSeek, a subsidiary of High-Flyer, owns its data centers, which gives it full control over its infrastructure and lets it iterate quickly. Being self-funded and paying high salaries (over $1.3 million annually for some researchers) helps it attract top Chinese talent. Although the company has invested more than $500 million in AI development, its streamlined structure allows it to innovate efficiently.

While DeepSeek's success shows what a well-funded independent AI company can achieve, the "revolutionary budget" claim is misleading. The true cost is far higher, yet still significantly less than that of competitor models such as OpenAI's GPT-4o, which reportedly cost $100 million to train, compared with DeepSeek's reported $5 million for R1. The disparity highlights DeepSeek's efficiency despite its substantial overall investment.
